Denoising strength controls how much the result changes compared with the original image. At 0 nothing changes; at 1 the masked area is regenerated with almost no regard for the original.

For Mask Content, you can use latent noise or latent nothing if you want to regenerate something completely different from the original, for example removing a limb or hiding a hand. These options initialize the masked area with something other than the original image, so the result will be completely different.
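To make the effect of denoising strength more concrete, here is a minimal sketch of how the setting typically translates into work done by the sampler. It assumes the common img2img convention that only roughly `steps × strength` of the sampling steps are actually run; the function name `effective_steps` is hypothetical, and the exact rounding in AUTOMATIC1111 may differ.

```python
def effective_steps(sampling_steps: int, denoising_strength: float) -> int:
    """Approximate number of denoising steps run for img2img or inpainting.

    Assumption: the sampler skips the first (1 - strength) fraction of the
    schedule, which is a common convention; exact rounding varies by tool.
    """
    if not 0.0 <= denoising_strength <= 1.0:
        raise ValueError("denoising_strength must be between 0 and 1")
    return min(int(sampling_steps * denoising_strength), sampling_steps)


# Compare a few strengths for a 20-step run.
for strength in (0.0, 0.5, 0.75, 1.0):
    steps_run = effective_steps(20, strength)
    print(f"strength={strength:.2f}: about {steps_run} of 20 steps are run")
```

Under this assumption, a low strength leaves most of the original image in place because very little denoising happens, while a strength of 1 runs the full schedule.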

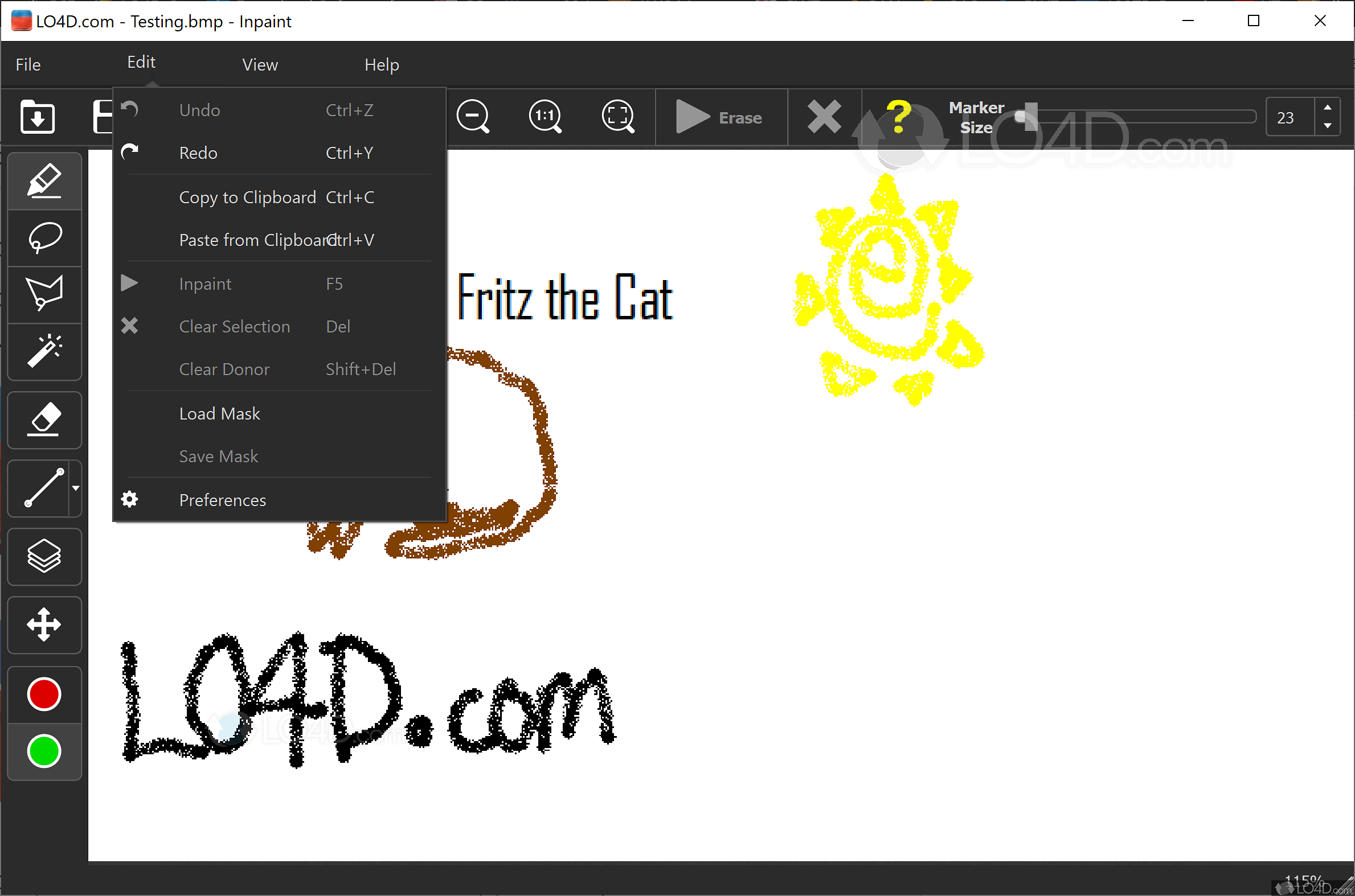

In AUTOMATIC1111, press the refresh icon next to the checkpoint selection dropdown at the top left. Select sd-v1-5-inpainting.ckpt to enable the model.

In the AUTOMATIC1111 GUI, select the img2img tab and then the Inpaint sub-tab. Upload the image to the inpainting canvas.

We will inpaint both the right arm and the face at the same time. Use the paintbrush tool to create a mask. This is the area you want Stable Diffusion to regenerate.

You can reuse the original prompt for fixing defects. This is like generating multiple images, but only in a particular area. The image size needs to be set to the same size as the original image.

If you are inpainting faces, you can turn on restore faces. You will also need to select and apply the face restoration model in the Settings tab. CodeFormer is a good one. Be aware that this option may generate unnatural looks. It may also generate something inconsistent with the style of the model.

The next important setting is Mask Content. Select original if you want the result guided by the color and shape of the original content. Original is often used when inpainting faces because the general shape and anatomy are already OK. In most cases, you will use Original and change the denoising strength to achieve different effects.
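If you prefer to script these settings instead of clicking through the GUI, the sketch below shows one way to express them as a request to the web UI's HTTP API. Treat it as an illustration under assumptions: it presumes the web UI was started with its API enabled, that the endpoint is `/sdapi/v1/img2img`, and that the field names (`init_images`, `mask`, `inpainting_fill`, `restore_faces`, and so on) match your installed version. The helper names `build_inpaint_payload` and `send_inpaint_request` are hypothetical. Check the API documentation page of your own instance before relying on it.

```python
import base64
import json
import urllib.request

# Mask Content choices in the order the GUI lists them.
# Assumption: the API encodes them as these integers.
MASK_CONTENT = {"fill": 0, "original": 1, "latent noise": 2, "latent nothing": 3}


def build_inpaint_payload(image_bytes, mask_bytes, prompt, width, height,
                          denoising_strength=0.75, mask_content="original",
                          restore_faces=False):
    """Build the JSON body for an inpainting request (field names are assumptions)."""
    return {
        "init_images": [base64.b64encode(image_bytes).decode("ascii")],
        "mask": base64.b64encode(mask_bytes).decode("ascii"),
        "prompt": prompt,              # the original prompt can be reused
        "width": width,                # keep the same size as the original image
        "height": height,
        "denoising_strength": denoising_strength,
        "inpainting_fill": MASK_CONTENT[mask_content],
        "restore_faces": restore_faces,
    }


def send_inpaint_request(payload, base_url="http://127.0.0.1:7860"):
    """POST the payload to a locally running web UI and return base64 images."""
    request = urllib.request.Request(
        f"{base_url}/sdapi/v1/img2img",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["images"]
```

A typical call would read the image and mask files as bytes, build the payload with `mask_content="original"` and `restore_faces=True` for a face fix, and pass it to `send_inpaint_request`.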

#Image inpaint install#

I will use an original image from the Lonely Palace prompt:

, (long hair:0.5), headLeaf, wearing stola, vast roman palace, large window, medieval renaissance palace, ((large room)), 4k, arstation, intricate, elegant, highly detailed

(Detailed settings can be found here.)

It’s a fine image, but I would like to fix a few defects in the right arm and the face.

Did you know there is a Stable Diffusion model trained for inpainting? You can use it if you want to get the best result. But usually, it’s OK to inpaint with the same model you generated the image with.

To install the v1.5 inpainting model, download the model checkpoint file and put it in the folder stable-diffusion-webui/models/Stable-diffusion
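The checkpoint install step amounts to copying one file into the model folder. Here is a minimal sketch, assuming you have already downloaded the checkpoint yourself; the function name `install_checkpoint` and the example paths are hypothetical, and only the `models/Stable-diffusion` folder comes from the instructions in this guide.

```python
import shutil
from pathlib import Path


def install_checkpoint(downloaded_file, webui_root):
    """Copy a downloaded checkpoint into the web UI's model folder.

    Returns the path of the installed file. Raises FileNotFoundError if the
    expected models/Stable-diffusion folder does not exist under webui_root.
    """
    model_dir = Path(webui_root) / "models" / "Stable-diffusion"
    if not model_dir.is_dir():
        raise FileNotFoundError(f"Model folder not found: {model_dir}")
    destination = model_dir / Path(downloaded_file).name
    shutil.copy2(downloaded_file, destination)
    return destination


# Example (paths are placeholders for your own setup):
# install_checkpoint("Downloads/sd-v1-5-inpainting.ckpt", "stable-diffusion-webui")
```

After copying, refresh the checkpoint list in the GUI so the new model shows up.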

#Image inpaint how to#

In this section, I will show you step by step how to use inpainting to fix small defects. We will use Stable Diffusion AI and the AUTOMATIC1111 GUI. See my quick start guide for setting up on Google’s cloud server.
